Olivia (Simin) Fan

❀Welcome to Olivia's WonderLand 🥕

Wish you a nice day! :)

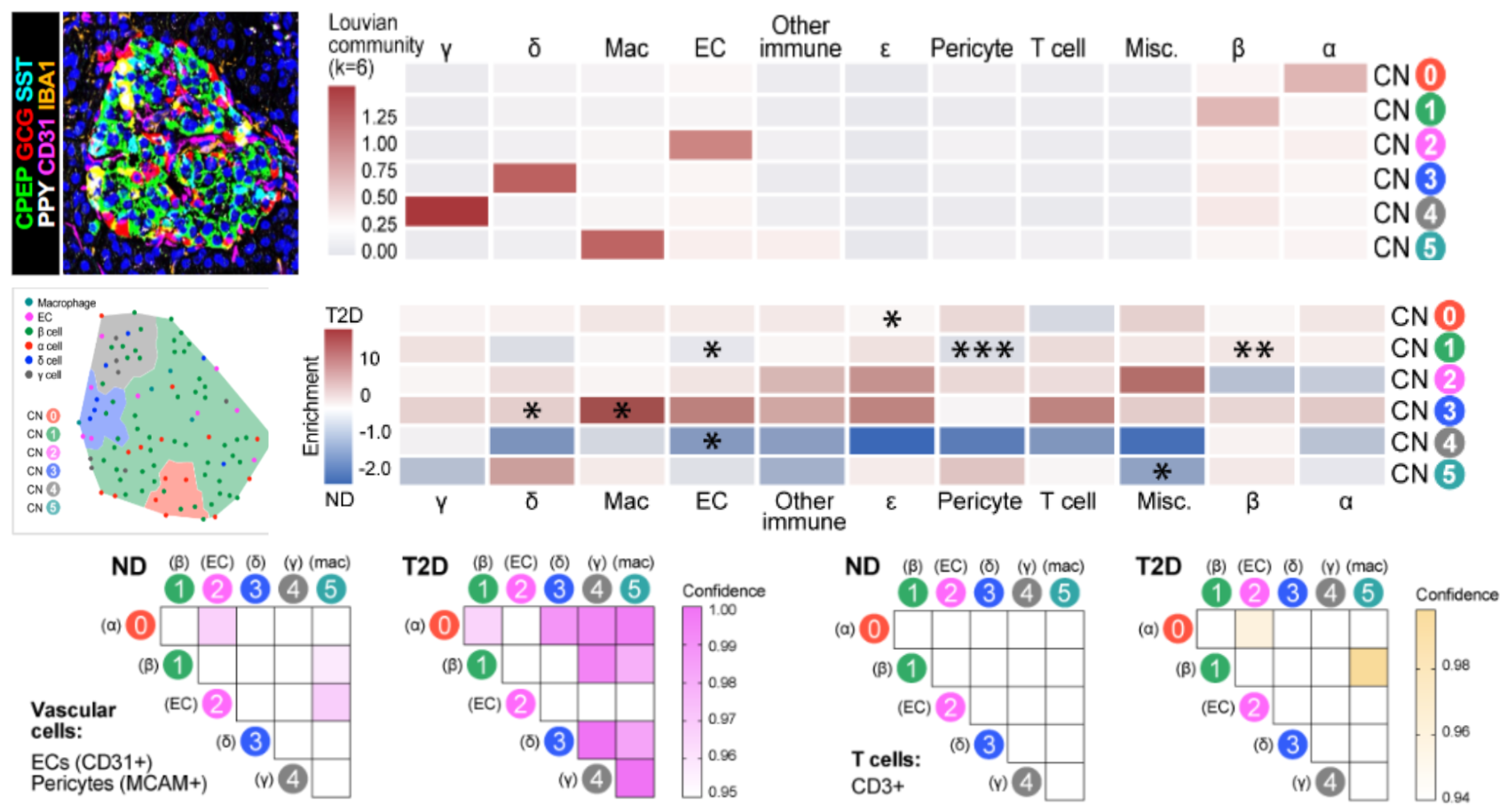

Walker JT, Saunders DC, Rai V, Dai C, Orchard P, Hopkirk AL, Reihsmann CV, Tao Y, Fan S, Shrestha S, Varshney A, Wright JJ, Pettway YD, Ventresca C, Agarwala S, Aramandla R, Poffenberger G, Jenkins R, Hart NJ, Greiner DL, Shultz LD, Bottino R, Liu J, Parker SC, Powers AC, Brissova M. [Nature 2023]

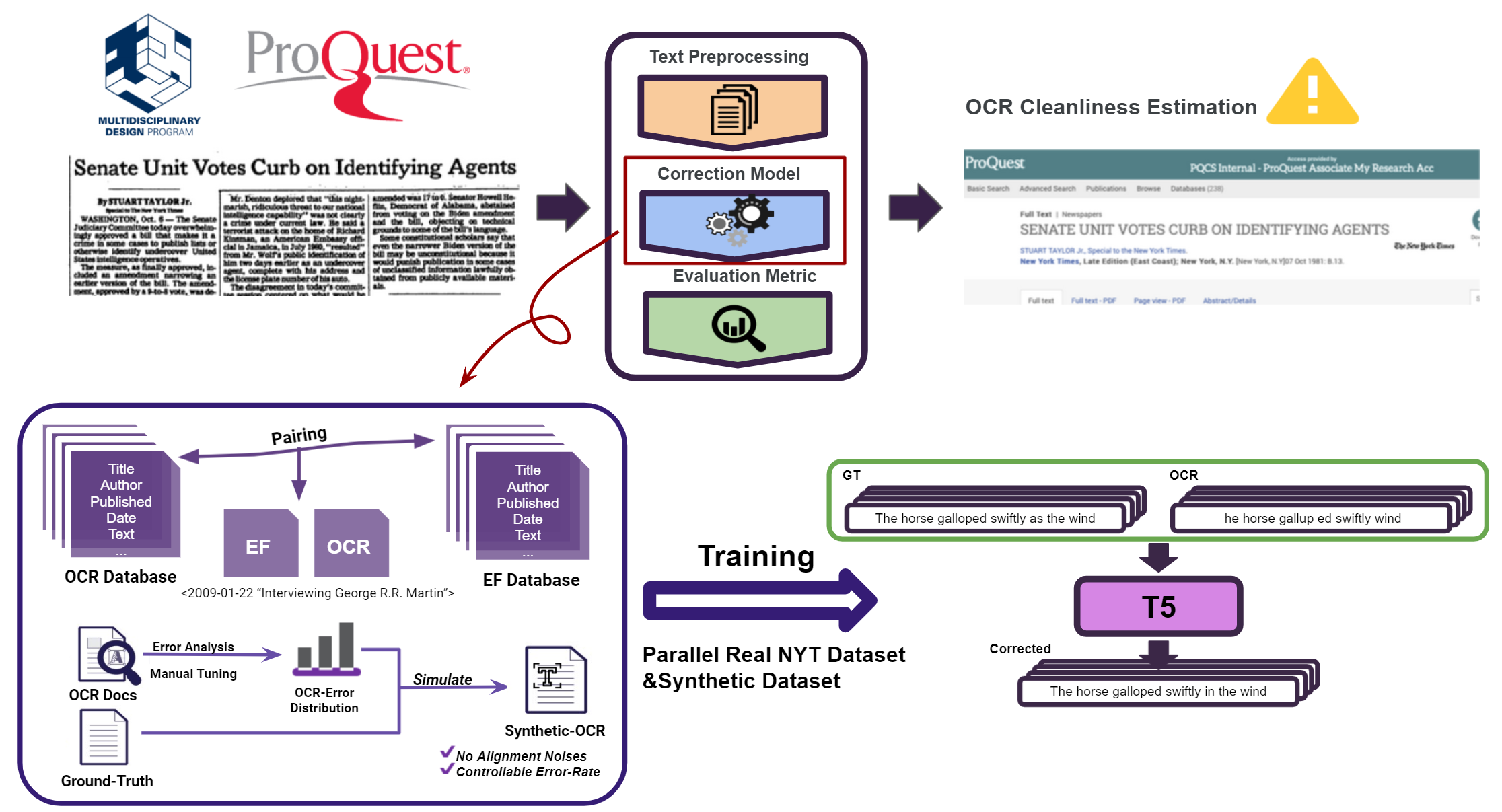

Instructor: Prof. Sindhu Kutty (UMICH)

Sponsors: Dr. John Dillon, Dr. Dan Hepp (ProQuest)

[Multi-disciplinary Project with ProQuest 2022]

I host open brainstorming hours for discussing research ideas, career advice, or just casual conversations about ML/AI. Feel free to book a 30-minute coffee chat with me! Whether you're a fellow researcher, a student, or just curious about my work, I'd love to connect and exchange ideas. 💭☕